Scaling Exadata Cloud Service X8M Compute

Following last week's post on how to provision the Exadata Cloud X8M service, this week I'm going a step further and scaling the infrastructure with more compute nodes. Until now, scaling an existing X6, X7 or X8 system in OCI required moving it to a different fixed-shape configuration. With X8M, however, the flexible shape lets you add compute nodes or storage cells when you need them.

I should perhaps have mentioned this in the earlier post: because cloud and on-premises terminology differ, the following terms are used interchangeably:

- Database servers, compute nodes, virtual machines: the servers where the Oracle database runs.

- Storage servers, storage cells: as the names suggest, the servers that hold all the disk drives and store your data.

When you provision the X8M cloud service you always start with 2 compute nodes and 3 storage servers. Additional compute nodes can be added online through the console with no downtime. You can then scale the processing power of the provisioned system by adding or removing CPU cores as needed. Cores are added or removed in multiples of the number of database servers currently provisioned for the cloud VM cluster; for example, with 4 database servers you can add CPU cores in multiples of 4.
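As a minimal sketch of the core-scaling rule, the Python function below rejects any change that is not a multiple of the number of database servers. `scale_cores` is a hypothetical helper, not part of any Oracle API. The per-server cap `PER_SERVER_MAX_OCPUS = 50` is an assumption inferred from the roughly 1600 OCPUs across 32 nodes quoted in the next paragraph, and the floor of 0 cores is a simplification; the real minimum may differ.

```python
# Hypothetical per-server cap: ~1600 OCPUs / 32 nodes, as quoted in the text.
PER_SERVER_MAX_OCPUS = 50


def scale_cores(current: int, delta: int, db_servers: int) -> int:
    """Return the new core count after adding `delta` cores (negative removes).

    Raises ValueError if the change is not a multiple of the number of
    database servers, or if the result falls outside 0..db_servers * cap.
    """
    if db_servers < 1:
        raise ValueError("the VM cluster needs at least one database server")
    if delta % db_servers != 0:
        raise ValueError(f"change must be a multiple of {db_servers}, got {delta}")
    new_total = current + delta
    cap = db_servers * PER_SERVER_MAX_OCPUS
    if not 0 <= new_total <= cap:
        raise ValueError(f"{new_total} cores is outside 0..{cap}")
    return new_total


# With 4 database servers, +8 cores is allowed but +6 is not.
print(scale_cores(16, 8, 4))  # 24
try:
    scale_cores(16, 6, 4)
except ValueError as err:
    print(err)  # change must be a multiple of 4, got 6
```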

Currently, the system can scale up to 32 compute nodes, or about 1600 OCPUs. That's a lot; I sometimes find it hard to manage a full rack with 8 compute nodes, let alone 32. On the other hand, it makes it very easy to scale your services up and down and/or move them to different nodes. One thing makes me wonder, though: how long will Grid Infrastructure (GI) patching take? GI patching is performed in a rolling fashion, one node at a time, and takes nearly an hour per node. With 24-32 nodes, arranging a 24-32 hour patching window might be hard, if not impossible, for some customers.
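To put the patching concern into numbers, here is a back-of-the-envelope estimate, assuming the roughly one hour per node quoted above and strictly sequential (rolling) patching. `rolling_patch_hours` is a hypothetical helper; real patch times depend on the patch and the environment.

```python
# Approximate per-node figure from the text, not a guarantee.
HOURS_PER_NODE = 1.0


def rolling_patch_hours(nodes: int, hours_per_node: float = HOURS_PER_NODE) -> float:
    """Rolling patching handles one node at a time, so time grows linearly."""
    if nodes < 1:
        raise ValueError("need at least one node")
    return nodes * hours_per_node


for nodes in (2, 8, 24, 32):
    print(f"{nodes:>2} nodes -> ~{rolling_patch_hours(nodes):.0f} h")
#  2 nodes -> ~2 h
#  8 nodes -> ~8 h
# 24 nodes -> ~24 h
# 32 nodes -> ~32 h
```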

Being able to scale up to 32 compute nodes this easily is great, but mind the following note from Oracle:

The Exadata X8M shape does not support removing storage or database servers from an existing X8M instance.

It's a shame this isn't supported, but I think it's only a matter of time before it arrives. X8M elasticity in the cloud makes it easier for customers to start small and grow big without spending a fortune up front. What would make the offer even more appealing is support for removing compute nodes and storage servers. There are different reasons you might want that: for example, a business needs to handle peak sales on Black Friday, or end-of-year reports must complete within a given time frame. It would be great to scale out the infrastructure to handle the demand and then scale back in again. That's not supported today, but hopefully we will see it soon, along with support for more than one VM Cluster on the same infrastructure.

Back in my days at E-DBA (Red Stack), I did many different upgrades over the years, and adding compute nodes or storage cells was one I did many times. It usually took two days, one at the data center and one remote: on the first day I did all the racking and stacking, arranged and connected the cables and re-imaged the nodes; on the second day I added them to the cluster or ASM. That's only my time; on top of it there is procurement, delivery, storage and paperwork such as arranging access. Here is how you do it in the cloud.

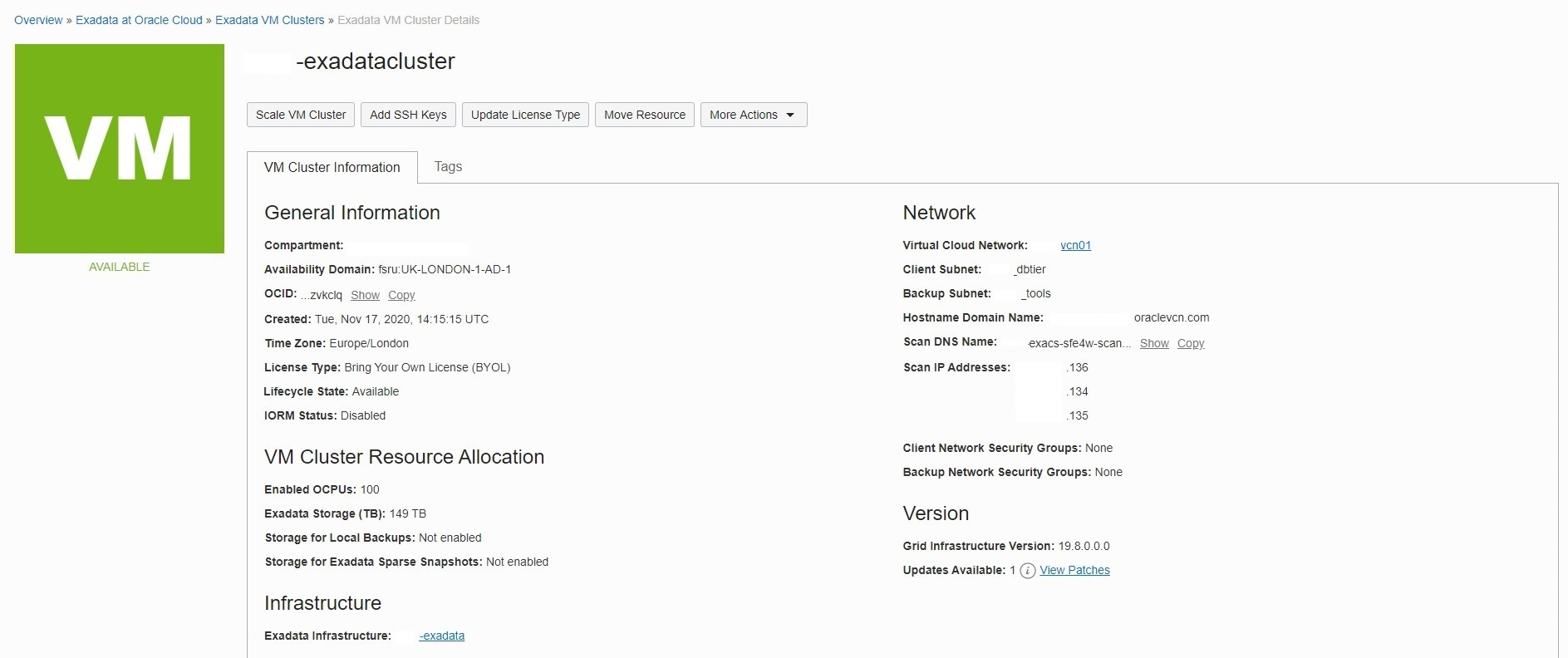

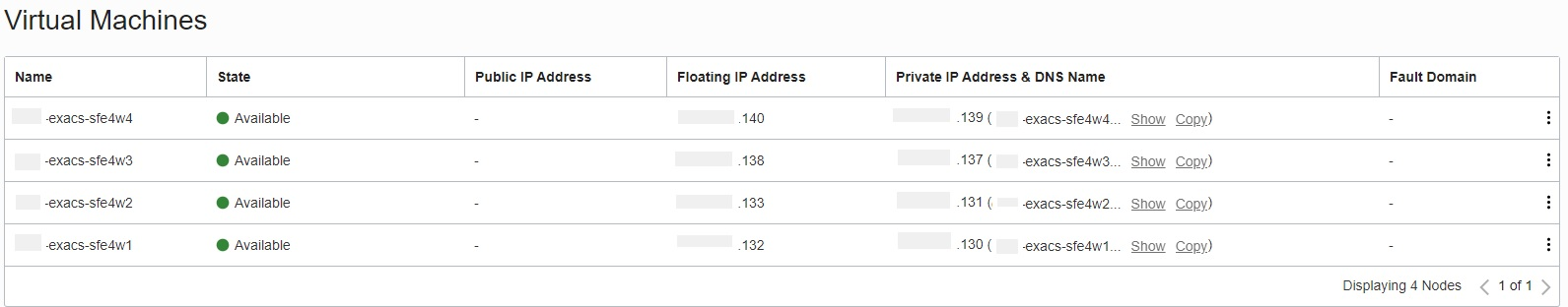

I will be adding two more compute nodes to my X8M cloud service, which currently looks like this:

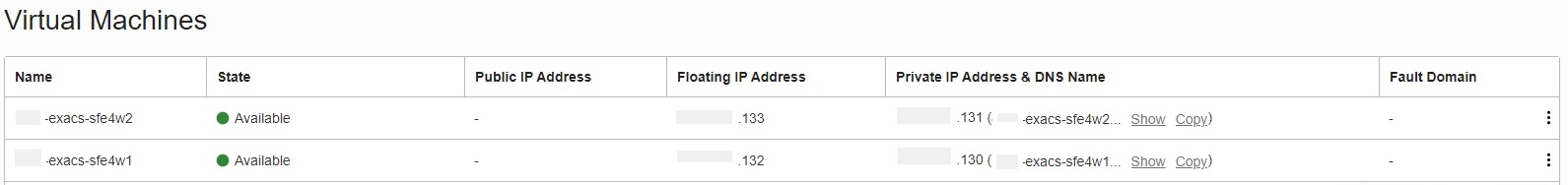

To add more compute nodes or storage servers, go to the Exadata Infrastructure resource and click the Scale Infrastructure button in the top left corner, which brings up the following screen. Here you choose whether to add compute or storage servers, and how many. You can add only one type of resource at a time:

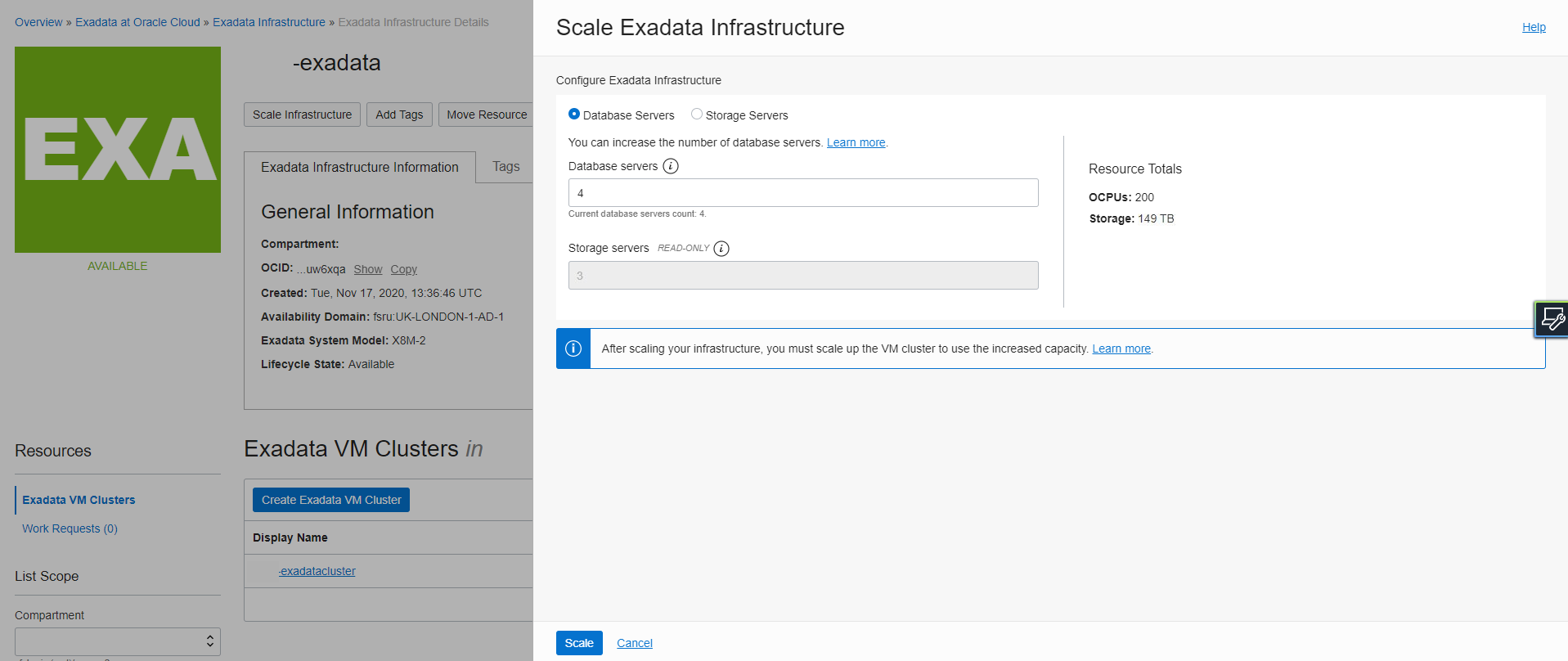
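The rules of this dialog, combined with Oracle's note that servers cannot be removed, can be modelled as a small validation sketch. `Infrastructure`, `Resource` and `scale` are hypothetical names, not an Oracle API. The 32-node compute limit comes from the text; since no storage limit is stated here, none is enforced.

```python
from dataclasses import dataclass
from enum import Enum

# Compute limit from the text; no storage limit is assumed.
MAX_COMPUTE_NODES = 32


class Resource(Enum):
    COMPUTE = "database server"
    STORAGE = "storage server"


@dataclass
class Infrastructure:
    compute_nodes: int = 2    # X8M starts with 2 compute nodes...
    storage_servers: int = 3  # ...and 3 storage servers


def scale(infra: Infrastructure, resource: Resource, count: int) -> Infrastructure:
    """Apply one scale request: a single resource type per call, additions only."""
    if count < 1:
        # The X8M shape does not support removing servers.
        raise ValueError("only additions are supported; count must be >= 1")
    if resource is Resource.COMPUTE:
        new_total = infra.compute_nodes + count
        if new_total > MAX_COMPUTE_NODES:
            raise ValueError(f"{new_total} compute nodes exceeds {MAX_COMPUTE_NODES}")
        return Infrastructure(new_total, infra.storage_servers)
    return Infrastructure(infra.compute_nodes, infra.storage_servers + count)


infra = scale(Infrastructure(), Resource.COMPUTE, 2)
print(infra)  # Infrastructure(compute_nodes=4, storage_servers=3)
```

Taking a single `Resource` per call mirrors the one-type-at-a-time restriction: adding both compute and storage means two separate requests.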

The operation completes immediately on the Exadata Infrastructure resource, and the VM Cluster resource moves to the Updating state. As with any other cloud resource, you can't run other operations on it while it is in this state and have to wait for the update to complete:

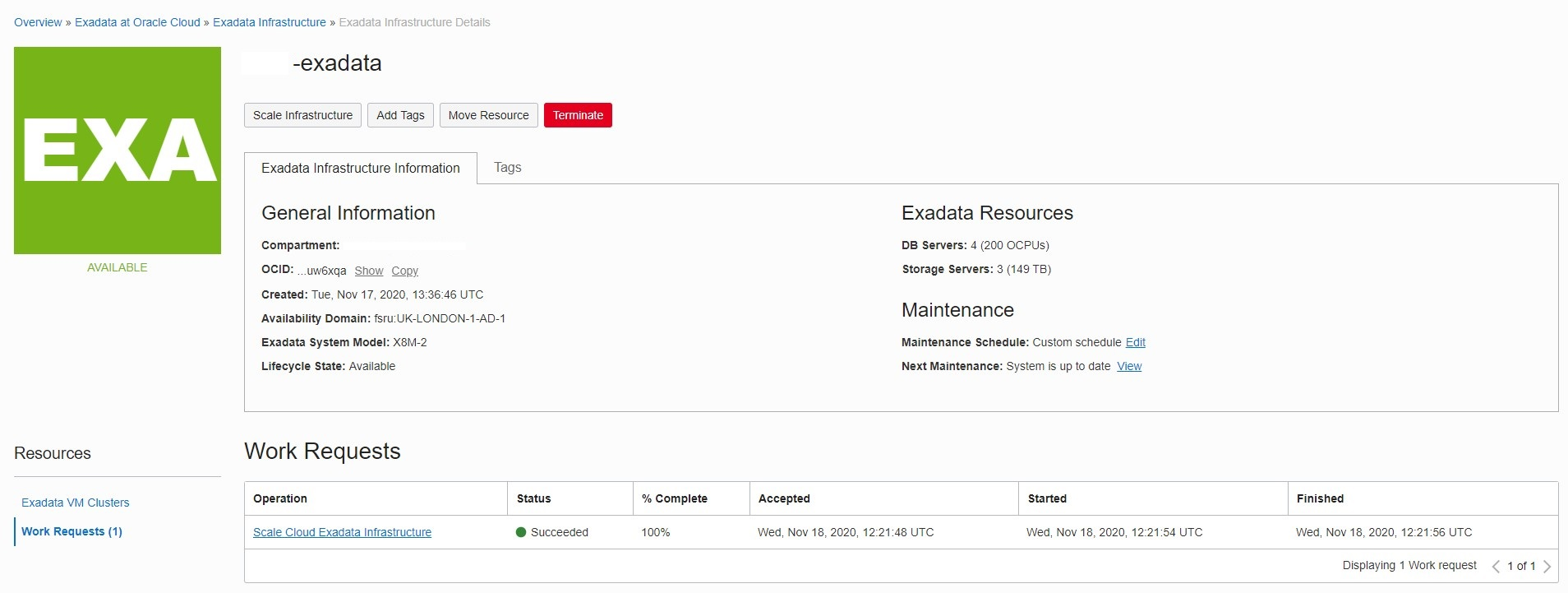
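If you script around the console rather than clicking refresh, waiting for the VM Cluster to leave the Updating state comes down to a polling loop. In this sketch `get_state` is a hypothetical callable you would wire to the OCI CLI or SDK yourself, and the state names `UPDATING` and `AVAILABLE` are assumed from OCI's lifecycle-state naming; check them against your own resources.

```python
import time
from typing import Callable


def wait_for_state(
    get_state: Callable[[], str],
    target: str = "AVAILABLE",
    busy: frozenset = frozenset({"UPDATING"}),
    poll_seconds: float = 60.0,
    timeout_seconds: float = 6 * 3600,
) -> str:
    """Poll `get_state` until it returns `target`; fail fast on unexpected states."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        state = get_state()
        if state == target:
            return state
        if state not in busy:
            raise RuntimeError(f"unexpected lifecycle state: {state}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"still {state} after {timeout_seconds} s")
        time.sleep(poll_seconds)


# Simulated cluster that reports UPDATING twice, then AVAILABLE.
states = iter(["UPDATING", "UPDATING", "AVAILABLE"])
print(wait_for_state(lambda: next(states), poll_seconds=0))  # AVAILABLE
```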

The process adds the two compute nodes to the cluster one at a time. It is fully automated and takes around 2 hours per compute node. Once it completes, all four compute nodes are listed under Virtual Machines, together with their details.

And that's all: quick and painless, and complete in less than 5 hours.
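Because nodes are added one at a time, the total duration grows with the number of nodes. Here is a minimal sketch of the expected timeline, assuming the roughly 2 hours per node observed above and a hypothetical start time; `addition_schedule` is an illustrative helper, and real durations will vary.

```python
from datetime import datetime, timedelta

# Approximate per-node figure from the text.
HOURS_PER_NODE = 2


def addition_schedule(start: datetime, nodes: int, hours_per_node: float = HOURS_PER_NODE):
    """Return (node_number, start, finish) tuples for nodes added one at a time."""
    step = timedelta(hours=hours_per_node)
    return [(n + 1, start + n * step, start + (n + 1) * step) for n in range(nodes)]


start = datetime(2020, 1, 1, 9, 0)  # hypothetical start time
for node, began, finished in addition_schedule(start, 2):
    print(f"node {node}: {began:%H:%M} -> {finished:%H:%M}")
# node 1: 09:00 -> 11:00
# node 2: 11:00 -> 13:00
```

Two nodes come to about 4 hours, which is consistent with the whole operation finishing in under 5 hours.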

Next week I'll be scaling the infrastructure with more storage nodes. Stay tuned!