Provisioning Exadata Cloud Service X8M

About a month ago Oracle released Exadata X8M in Oracle Cloud Infrastructure (OCI). Besides finally bringing X8M to the cloud, the major feature of this release is the ability to provision elastic configurations. That's not a new Exadata feature, and I've used it many times in the past. However, it's new for OCI, and it makes the cloud an even more desirable platform because now you can get an Exadata of any shape.

What makes this possible is RoCE, a new X8M feature that replaces the traditional InfiniBand fabric with Ethernet. In previous Exadata generations, which used InfiniBand, racks could not be more than 100m apart. That's fine if you are a small shop with one or two Exadata systems, but with this approach it's almost impossible for a hyperscaler like Oracle to provide flexibility and elasticity to end customers. X8M no longer uses InfiniBand switches. Instead it uses 36-port Cisco Nexus 9336C Ethernet switches and the RDMA over Converged Ethernet (RoCE) set of protocols. In other words, we still use Remote Direct Memory Access (RDMA), as with InfiniBand, but over Ethernet.

Back to the cloud: until now Oracle offered only quarter, half and full rack shapes. That wasn't a great choice for many customers, including the one I'm currently working with. The customer already had an X7 half rack in OCI and wanted to provision a new Exadata environment in OCI for performance testing. The problem was that we needed about 220TB of usable space in the DATA diskgroup, and a half rack gives around 240TB. That would have put us in a very difficult position as the databases grow, so it was a tough decision to make. Fortunately, around that time Oracle announced X8M in OCI, with its much-desired elastic configurations. We can now provision the same number of compute nodes and a few more storage cells to meet the storage requirement.

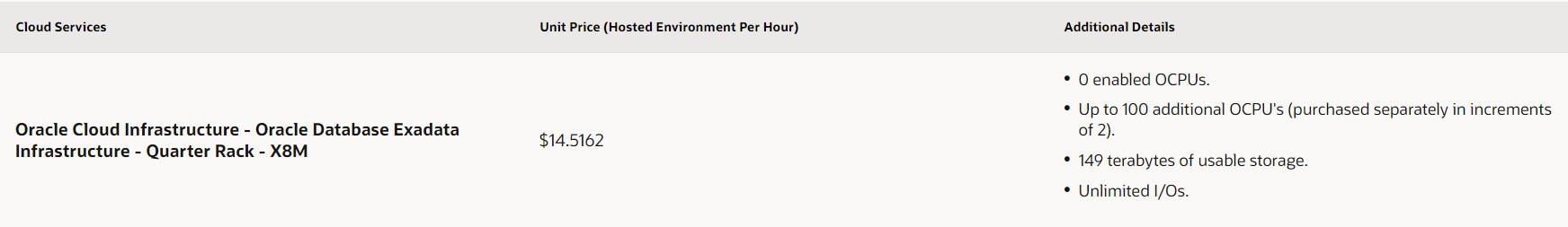

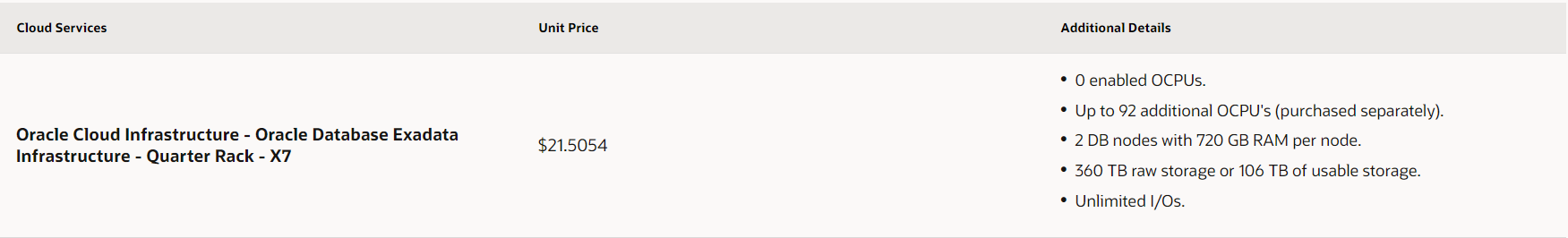

Comparing the Exadata Cloud Service X7 and X8M shapes, one can't help noticing the difference in CPU and usable disk space:

- Exadata.Quarter2.92 (X7): Provides a 2-node Exadata DB system with up to 92 CPU cores and 106 TB of usable storage.

- Exadata.Quarter3.100 (X8): Provides a 2-node Exadata DB system with up to 100 CPU cores and 149 TB of usable storage.

That's expected: the X8 has newer CPUs with more cores and bigger disks in the storage cells (14TB compared to 10TB). What I didn't expect, however, is the price difference between the two systems.
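As a quick sanity check on the shape figures above, the percentages can be recomputed in a few lines of Python. This is only arithmetic on the numbers quoted in this post (the dictionary and function names are mine): usable storage grows by about 40.6%, almost exactly the 40% jump in disk size, while the core count grows by under 9%.

```python
# Figures quoted in this post for the two base (quarter rack) OCI shapes.
SHAPES = {
    "Exadata.Quarter2.92 (X7)": {"cores": 92, "usable_tb": 106, "disk_tb": 10},
    "Exadata.Quarter3.100 (X8)": {"cores": 100, "usable_tb": 149, "disk_tb": 14},
}


def pct_increase(old: float, new: float) -> float:
    """Percentage increase from old to new, rounded to one decimal place."""
    return round((new - old) / old * 100, 1)


def compare(old: dict, new: dict) -> dict:
    """Percentage increase for every metric present in both shapes."""
    return {key: pct_increase(old[key], new[key]) for key in old if key in new}


if __name__ == "__main__":
    x7, x8 = SHAPES.values()
    for metric, delta in compare(x7, x8).items():
        print(f"{metric}: +{delta}%")
```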

That's a good saving of $7,000 per month. The price alone is reason enough to go for X8M, let alone the extra CPUs, the additional space and features such as PMEM and RoCE.

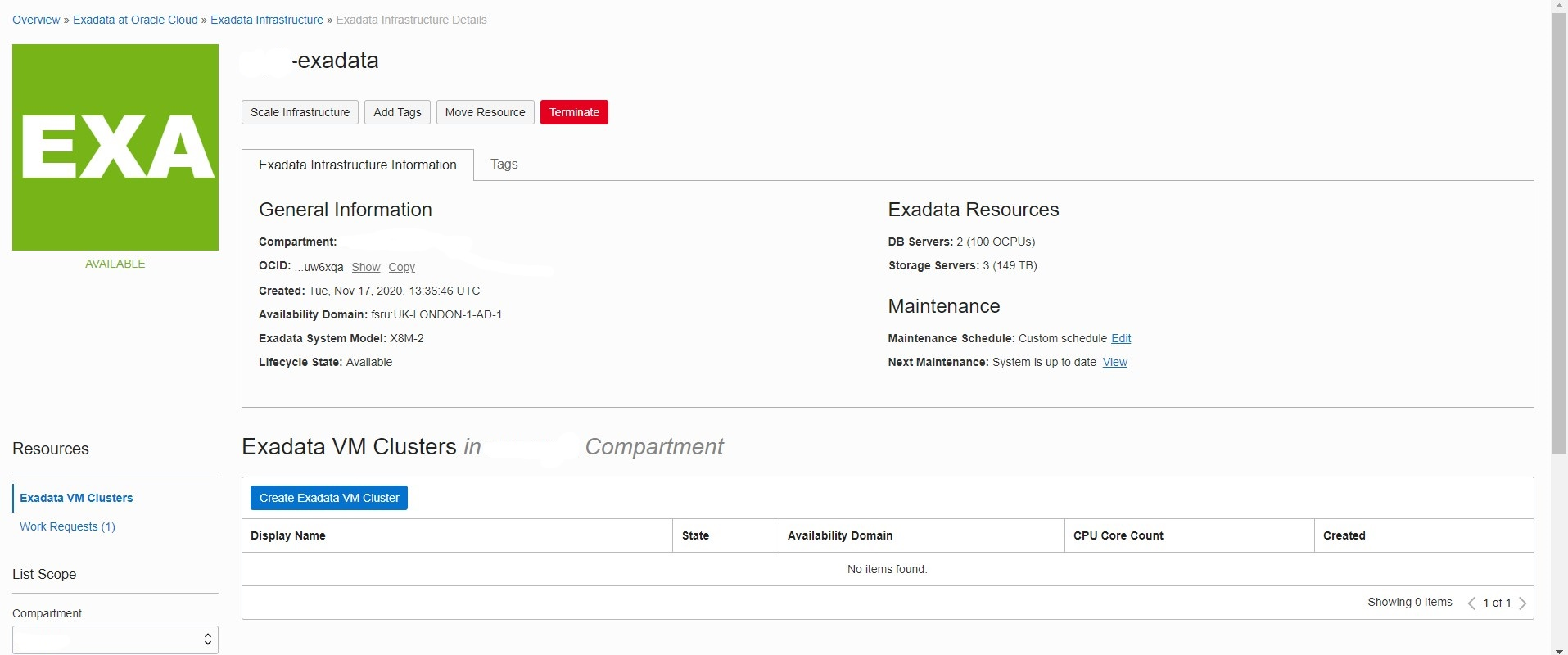

Now, to the more interesting stuff. The new X8M shape uses the new resource model, and the system is no longer available under DB Systems. Instead, it is provisioned under Exadata Infrastructure, a top-level resource where you manage the infrastructure itself, that is, the number of compute nodes and storage cells. Below it sits the VM Cluster resource, a child of the infrastructure resource, which holds the database homes, databases, networking and cluster details. In short, under Exadata Infrastructure you scale out the physical resources (compute and storage) and schedule upgrades of the Exadata Storage Software. Under VM Clusters you scale up the existing compute nodes and schedule upgrades of the Grid Infrastructure. This will become clearer in the screenshots below.
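To make the split between the two levels concrete, here is a minimal Python model of the hierarchy. It is purely illustrative: the class and method names are hypothetical and are not the OCI API. Scale-out (adding database or storage servers) lives on the infrastructure object, while scale-up (changing the enabled cores) lives on the VM Cluster.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VmCluster:
    """Child resource: cores, GI version and database homes (hypothetical model)."""
    name: str
    cpu_cores: int
    gi_version: str = "19c"
    db_homes: List[str] = field(default_factory=list)

    def scale_up(self, cpu_cores: int) -> None:
        """Scale up: change the cores enabled on the existing compute nodes."""
        if cpu_cores <= 0:
            raise ValueError("cpu_cores must be positive")
        self.cpu_cores = cpu_cores


@dataclass
class ExadataInfrastructure:
    """Top-level resource: physical compute nodes and storage cells (hypothetical model)."""
    name: str
    compute_nodes: int = 2  # base configuration: 2 database servers
    storage_cells: int = 3  # base configuration: 3 storage servers
    vm_clusters: List[VmCluster] = field(default_factory=list)

    def scale_out(self, extra_compute: int = 0, extra_storage: int = 0) -> None:
        """Scale out: add physical database and/or storage servers."""
        if extra_compute < 0 or extra_storage < 0:
            raise ValueError("scale-out only adds servers")
        self.compute_nodes += extra_compute
        self.storage_cells += extra_storage


if __name__ == "__main__":
    infra = ExadataInfrastructure("exa-infra")
    cluster = VmCluster("exa-cluster", cpu_cores=50)
    infra.vm_clusters.append(cluster)
    infra.scale_out(extra_storage=2)  # physical change, infrastructure level
    cluster.scale_up(80)              # core change, VM Cluster level
    print(infra)
```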

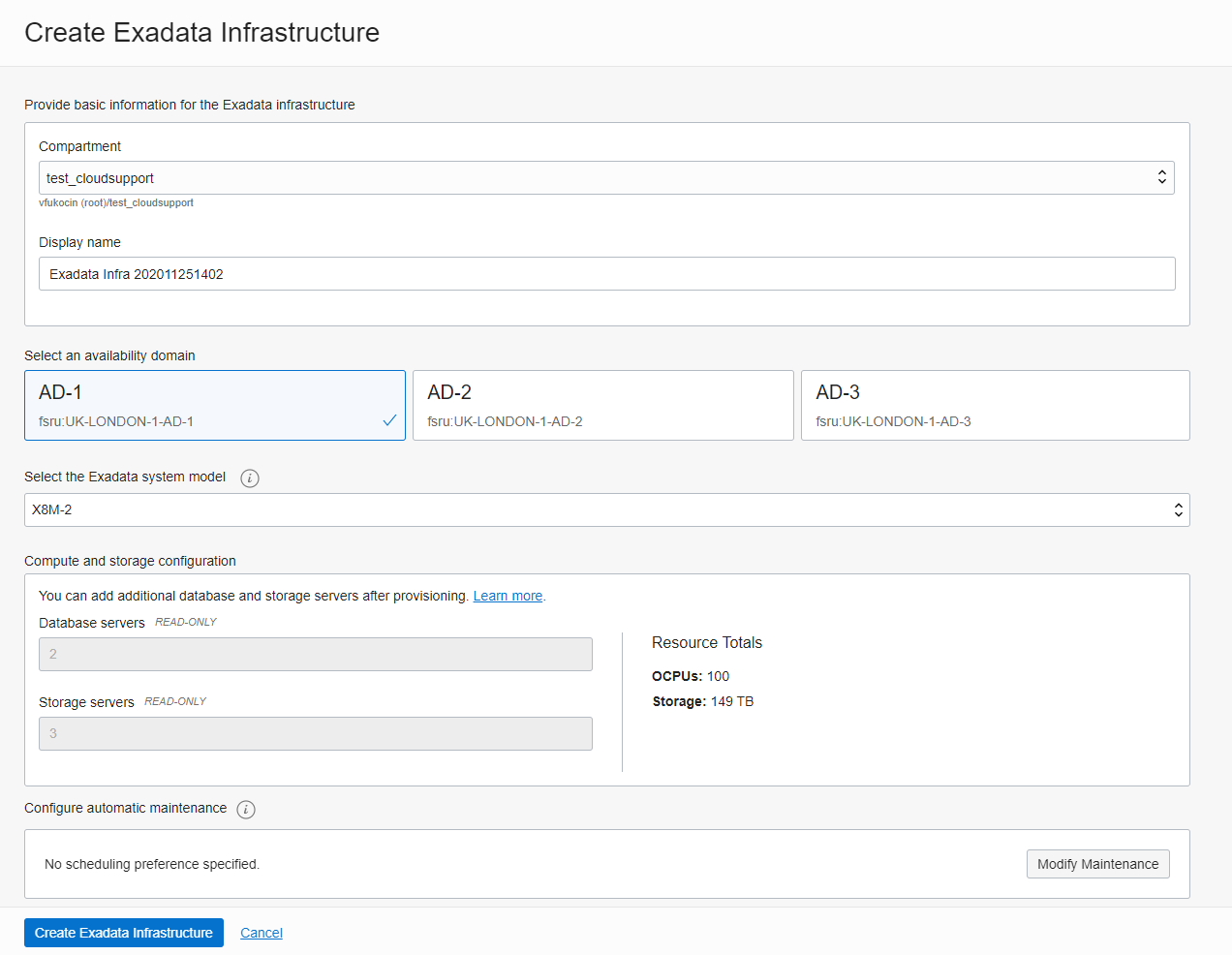

Provisioning Exadata Infrastructure

The new resource model has two resource types, Exadata Infrastructure and VM Clusters, and each one has to be provisioned separately, starting with Exadata Infrastructure:

The initial Exadata Cloud Service instance has 2 database servers and 3 storage servers by default, the equivalent of an X8 quarter rack. After provisioning, additional storage and compute servers can be added to the configuration dynamically.
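With elastic configurations, sizing storage becomes a matter of adding storage servers to the base three. Below is a rough sketch, assuming usable capacity scales linearly per storage server and using the roughly 49.7 TB per server implied by the 149 TB across 3 servers quoted for the quarter shape in this post. The real X8M per-server figure should come from the data sheet, and the function name is mine. For the 220TB DATA requirement it gives 5 storage servers, or 6 with 20% growth headroom.

```python
import math

# Assumption: usable capacity grows linearly with the number of storage
# servers. Per-server figure derived from the base shape quoted in this post
# (149 TB usable across 3 storage servers); check the current data sheet.
BASE_STORAGE_SERVERS = 3
BASE_USABLE_TB = 149.0
USABLE_TB_PER_SERVER = BASE_USABLE_TB / BASE_STORAGE_SERVERS


def storage_servers_needed(required_tb: float, headroom: float = 0.0) -> int:
    """Smallest number of storage servers (never below the base 3) whose
    estimated usable capacity covers required_tb plus a growth headroom
    fraction (0.2 means 20% extra)."""
    target = required_tb * (1 + headroom)
    return max(BASE_STORAGE_SERVERS, math.ceil(target / USABLE_TB_PER_SERVER))


if __name__ == "__main__":
    for headroom in (0.0, 0.2):
        n = storage_servers_needed(220, headroom)
        print(f"{headroom:.0%} headroom: {n} storage servers, "
              f"~{n * USABLE_TB_PER_SERVER:.0f} TB usable")
```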

Provisioning is instantaneous because no physical resources are allocated yet; this new resource simply acts as the parent for the VM Cluster that will be provisioned next. The only option available here is a maintenance schedule preference, and that can be changed later.

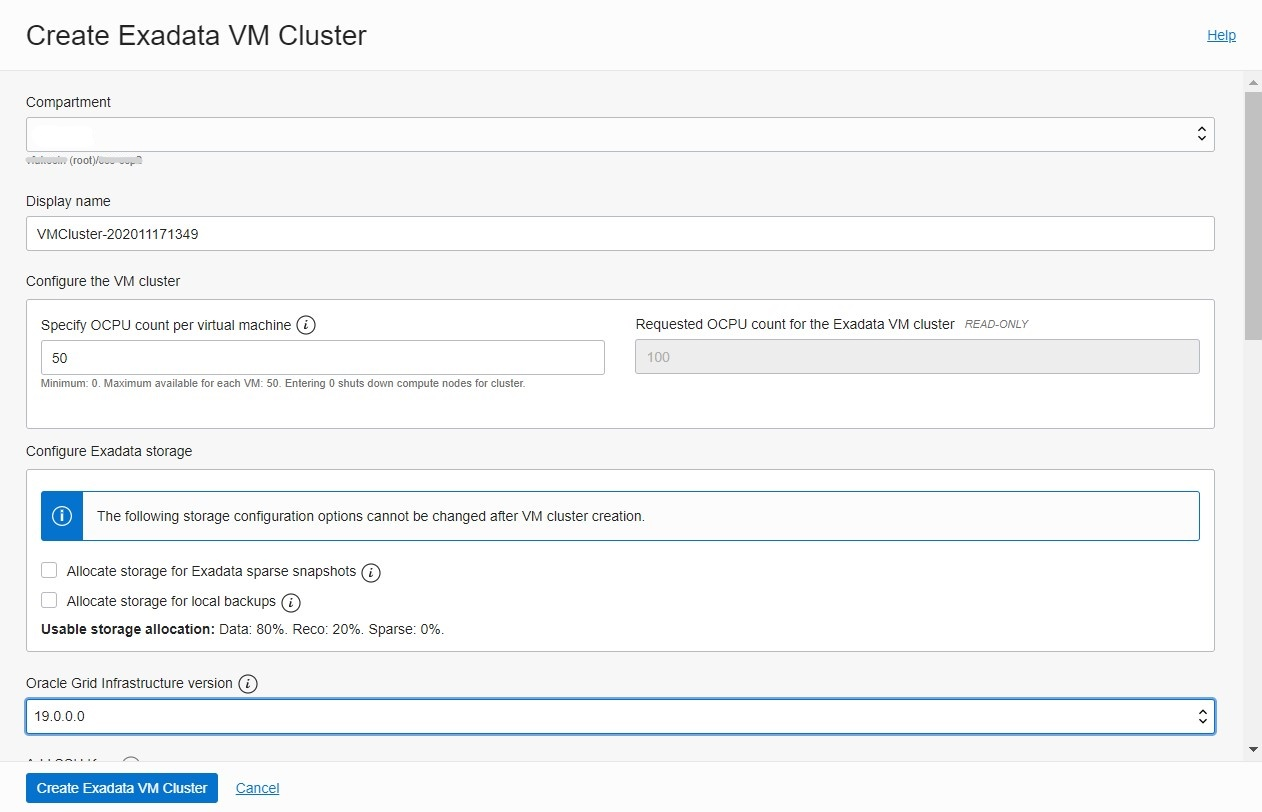

Provisioning VM Cluster

Moving on to the VM Cluster resource, that's where the actual provisioning happens. The process is similar to provisioning the previous Exadata generation: you specify the cluster name, how many CPU cores you want to assign to this cluster, and whether you want a sparse disk group and/or local backups. There's an option to choose the Grid Infrastructure version, but the only version available at the moment is 19c. The other options you specify are the client and backup subnets, hostname prefix, time zone and the type of license you want to use.
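The VM Cluster inputs listed above can be captured as a small, validated spec before clicking through the console. This is a hypothetical sketch: the field names are mine rather than the OCI API's, the two license values are assumed labels for license-included and bring-your-own-license, and the core limit is passed in because it depends on the shape (100 cores in the example, matching the quarter shape quoted earlier).

```python
from dataclasses import dataclass
from typing import List

# Hypothetical representation of the VM Cluster form; not the OCI API.
SUPPORTED_GI_VERSIONS = {"19c"}  # the only choice at the time of writing
LICENSE_TYPES = {"LICENSE_INCLUDED", "BRING_YOUR_OWN_LICENSE"}  # assumed labels


@dataclass
class VmClusterSpec:
    cluster_name: str
    cpu_cores: int
    hostname_prefix: str
    client_subnet: str
    backup_subnet: str
    time_zone: str = "UTC"
    gi_version: str = "19c"
    license_type: str = "LICENSE_INCLUDED"
    sparse_diskgroup: bool = False
    local_backups: bool = False

    def validate(self, max_cores: int) -> List[str]:
        """Return a list of problems; an empty list means the spec looks valid."""
        problems = []
        if not 0 < self.cpu_cores <= max_cores:
            problems.append(f"cpu_cores must be between 1 and {max_cores}")
        if self.gi_version not in SUPPORTED_GI_VERSIONS:
            problems.append(f"unsupported GI version {self.gi_version!r}")
        if self.license_type not in LICENSE_TYPES:
            problems.append(f"unknown license type {self.license_type!r}")
        if not self.hostname_prefix:
            problems.append("hostname_prefix is required")
        return problems


if __name__ == "__main__":
    spec = VmClusterSpec("test-exacs", 50, "test-exacs", "client-subnet", "backup-subnet")
    print(spec.validate(max_cores=100) or "spec looks valid")
```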

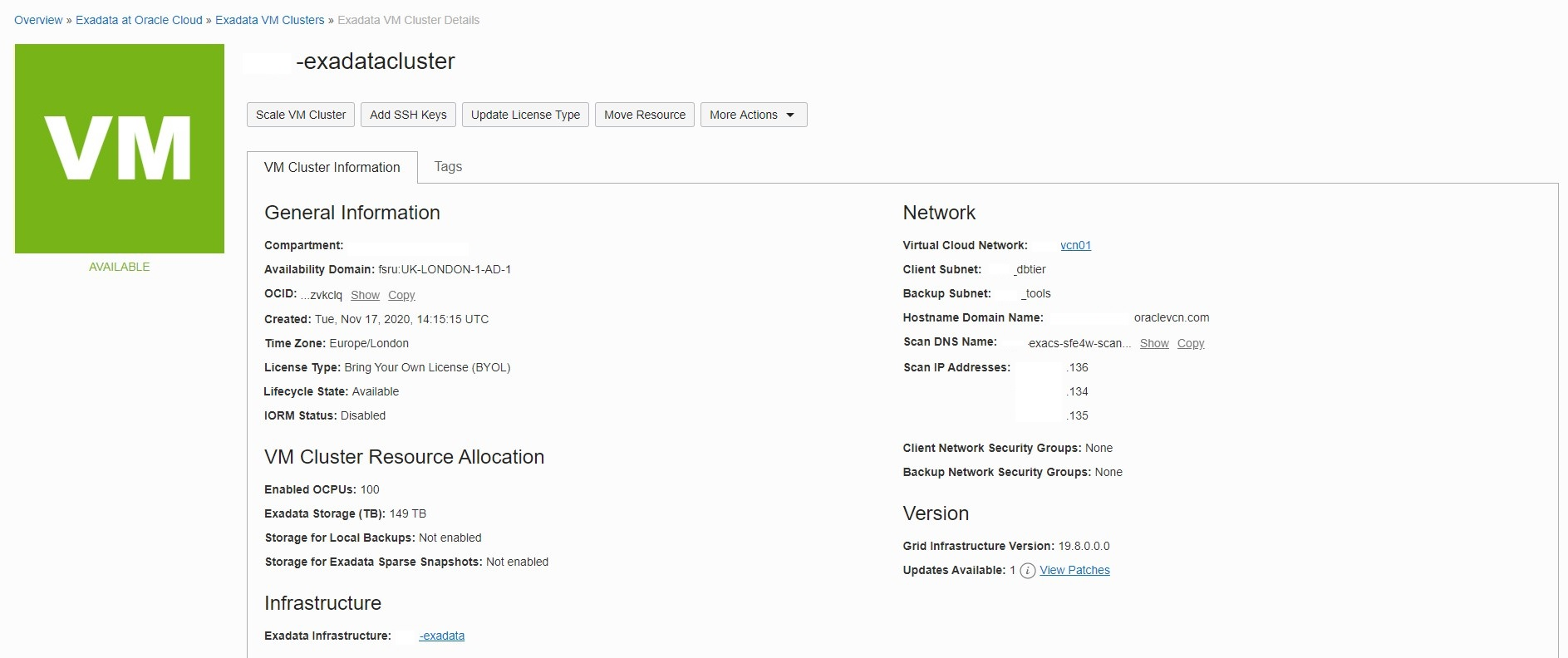

The process takes a few hours to complete, and then you have the cluster up and running, ready to host databases:

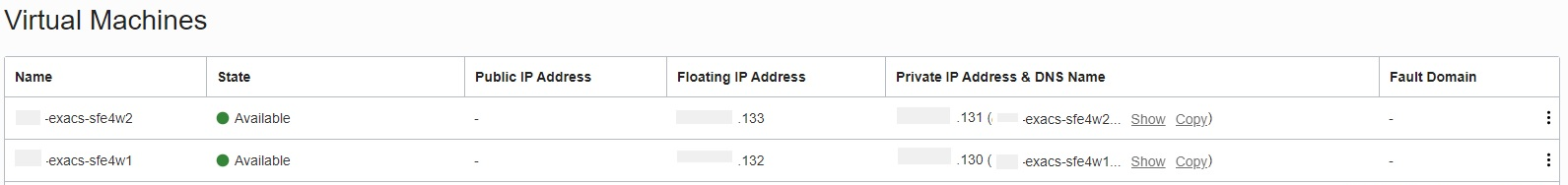

The database (compute) nodes are now referred to as virtual machines; the tab looks exactly the same as before:

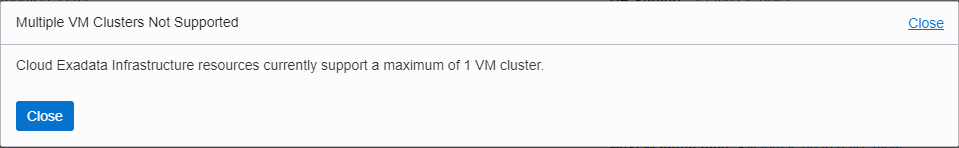

Interestingly, however, if you try to provision another VM Cluster, you get this message:

This suggests that we might get the option to provision more clusters under the same infrastructure. That has been available for on-premises Exadata for a few years now, and it's natural for it to come to the cloud at some point, just as elastic configurations now have.
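Until multiple VM Clusters are supported, the behaviour amounts to a per-infrastructure cap of one cluster. A minimal sketch of that rule, assuming the cap is a simple count; the class, the `max_vm_clusters` parameter and the error text are hypothetical, not Oracle's.

```python
from typing import List


class ClusterLimitError(Exception):
    """Raised when an infrastructure already hosts its maximum number of VM Clusters."""


class Infrastructure:
    """Hypothetical parent resource that tracks its child VM Clusters."""

    def __init__(self, name: str, max_vm_clusters: int = 1):
        self.name = name
        # 1 in the cloud today; on-premises Exadata already allows more.
        self.max_vm_clusters = max_vm_clusters
        self.vm_clusters: List[str] = []

    def add_vm_cluster(self, cluster_name: str) -> None:
        """Attach a VM Cluster, refusing once the cap is reached."""
        if len(self.vm_clusters) >= self.max_vm_clusters:
            raise ClusterLimitError(
                f"{self.name} already has {len(self.vm_clusters)} VM Cluster(s)"
            )
        self.vm_clusters.append(cluster_name)
```

Raising the cap later would then be a configuration change rather than a change to the resource model itself.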

The newly provisioned system runs the September release of the Exadata Storage Software:

```
Kernel version: 4.14.35-1902.304.6.4.el7uek.x86_64 #2 SMP Tue Aug 4 12:36:50 PDT 2020 x86_64
Image kernel version: 4.14.35-1902.304.6.4.el7uek
Image version: 20.1.2.0.0.200930
Image activated: 2020-11-17 15:04:40 +0000
Image status: success
Node type: GUEST
System partition on device: /dev/mapper/VGExaDb-LVDbSys1
```
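When checking this across several nodes, it's handy to turn the output into a dictionary. A small sketch, assuming the plain `Key: value` layout shown above; reading the last field of the image version (`200930`) as a YYMMDD build date is my assumption, though it matches the September release. The function names are mine.

```python
from datetime import date

# Output copied from the node above.
SAMPLE = """\
Kernel version: 4.14.35-1902.304.6.4.el7uek.x86_64 #2 SMP Tue Aug 4 12:36:50 PDT 2020 x86_64
Image kernel version: 4.14.35-1902.304.6.4.el7uek
Image version: 20.1.2.0.0.200930
Image activated: 2020-11-17 15:04:40 +0000
Image status: success
Node type: GUEST
System partition on device: /dev/mapper/VGExaDb-LVDbSys1
"""


def parse_image_info(text: str) -> dict:
    """Turn 'Key: value' lines into a dict, splitting on the first colon only
    so values that contain colons (timestamps) stay intact."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip():
            info[key.strip()] = value.strip()
    return info


def split_image_version(version: str):
    """Split '20.1.2.0.0.200930' into the release tuple and a build date,
    assuming the last field is a YYMMDD stamp."""
    *release, stamp = version.split(".")
    build = date(2000 + int(stamp[:2]), int(stamp[2:4]), int(stamp[4:6]))
    return tuple(int(part) for part in release), build


if __name__ == "__main__":
    info = parse_image_info(SAMPLE)
    print(info["Image version"], info["Image status"])
    print(split_image_version(info["Image version"]))
```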

While writing this post, I noticed that version 20.1.4.0.0 had been released to fix a critical bug in 20.1.3.0.0. We'll see how long it takes for this update to become available in the cloud, and I'll blog about it.
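Working out whether a node is behind a given release is a numeric comparison of the dotted fields. A minimal sketch, assuming the first five fields identify the release (as in 20.1.4.0.0) and ignoring the trailing build stamp. Comparing the raw strings would go wrong once a field reaches two digits: a hypothetical 20.1.10.0.0 sorts before 20.1.4.0.0 as text. The function names are mine.

```python
def release_tuple(version: str, fields: int = 5) -> tuple:
    """Numeric tuple of the first `fields` dot-separated parts of a version."""
    return tuple(int(part) for part in version.split(".")[:fields])


def needs_update(running: str, target: str) -> bool:
    """True if the running image is an older release than the target."""
    return release_tuple(running) < release_tuple(target)


if __name__ == "__main__":
    # The image on the new system versus the release mentioned above.
    print(needs_update("20.1.2.0.0.200930", "20.1.4.0.0"))
```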

The other thing to note, which is also a significant change for the Exadata platform, is the change in the directory structure:

```
[root@test-exacs-sfe4w1 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
devtmpfs 684G 0 684G 0% /dev
tmpfs 1.4T 209M 1.4T 1% /dev/shm
tmpfs 684G 5.3M 684G 1% /run
tmpfs 684G 0 684G 0% /sys/fs/cgroup
/dev/mapper/VGExaDb-LVDbSys1 61G 6.0G 56G 10% /
/dev/mapper/VGExaDb-LVDbTmp 3.0G 104M 2.9G 4% /tmp
/dev/mapper/VGExaDb-LVDbHome 4.0G 58M 4.0G 2% /home
/dev/mapper/VGExaDb-LVDbOra1 250G 5.1G 245G 3% /u01
/dev/mapper/VGExaDbDisk.grid19.0.0.0.200714.img-LVDBDisk 20G 14G 6.4G 69% /u01/app/19.0.0.0/grid
/dev/mapper/VGExaDb-LVDbVar1 10G 1.2G 8.9G 12% /var
/dev/mapper/VGExaDbDisk.u02_extra.img-LVDBDisk 763G 163G 562G 23% /u02
/dev/mapper/VGExaDb-LVDbVarLog 30G 409M 30G 2% /var/log
/dev/mapper/VGExaDb-LVDbVarLogAudit 10G 150M 9.9G 2% /var/log/audit
/dev/sda1 509M 84M 425M 17% /boot
tmpfs 137G 0 137G 0% /run/user/0
tmpfs 137G 0 137G 0% /run/user/2000
/dev/asm/acfsvol01-292 1.1T 125G 996G 12% /acfs01
tmpfs 137G 0 137G 0% /run/user/12138
```
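The notable part is that the Grid Infrastructure home and /u02 are mounted from volume groups whose names embed an image file name (`VGExaDbDisk.grid19.0.0.0.200714.img`, `VGExaDbDisk.u02_extra.img`) rather than from logical volumes in the usual `VGExaDb` group, which suggests they are backed by separate disk images. A minimal Python sketch that picks those mounts out of `df -h` output, assuming one line per filesystem as shown above (the regex and function name are mine):

```python
import re

# A subset of the df -h output above.
DF_SAMPLE = """\
Filesystem Size Used Avail Use% Mounted on
/dev/mapper/VGExaDb-LVDbSys1 61G 6.0G 56G 10% /
/dev/mapper/VGExaDb-LVDbOra1 250G 5.1G 245G 3% /u01
/dev/mapper/VGExaDbDisk.grid19.0.0.0.200714.img-LVDBDisk 20G 14G 6.4G 69% /u01/app/19.0.0.0/grid
/dev/mapper/VGExaDbDisk.u02_extra.img-LVDBDisk 763G 163G 562G 23% /u02
/dev/asm/acfsvol01-292 1.1T 125G 996G 12% /acfs01
"""

# Volume group names of the form VGExaDbDisk.<image>.img, as seen above.
IMAGE_VG = re.compile(r"^/dev/mapper/VGExaDbDisk\.(?P<image>.+\.img)-LVDBDisk$")


def image_backed_mounts(df_output: str) -> dict:
    """Map mount point -> image name for filesystems on VGExaDbDisk.* groups."""
    mounts = {}
    for line in df_output.splitlines()[1:]:  # skip the header line
        fields = line.split()
        if len(fields) < 6:
            continue
        device, mount_point = fields[0], fields[5]
        match = IMAGE_VG.match(device)
        if match:
            mounts[mount_point] = match.group("image")
    return mounts


if __name__ == "__main__":
    for mount_point, image in image_backed_mounts(DF_SAMPLE).items():
        print(f"{mount_point}: {image}")
```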

In the next posts I will go through scaling the infrastructure (compute and storage), infrastructure and GI patching, cloning databases from Object Storage onto the new system, and all the steps required to get them into the cloud UI.

Here's the cloud service data sheet:

Oracle Exadata Cloud Service X8M data sheet

If you are interested in what's behind the scenes, here's the X8M-2 data sheet:

Oracle Exadata X8M-2 data sheet

Link to Exadata Cloud Service documentation

The following page describes the differences between X8-2 and X8M-2.